
Planning for Evaluation


Steps in Evaluating a School or District Technology Program

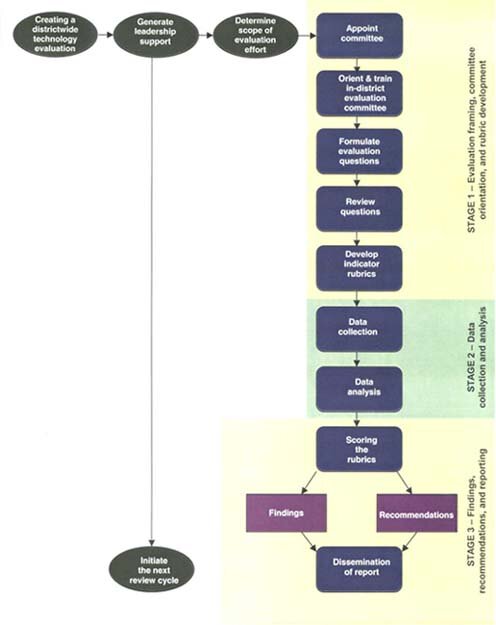

Sun Associates, an educational consulting firm and frequent SEIR*TEC collaborator, has worked with a number of school districts to develop and facilitate formative evaluations of technology's impact on teaching and learning. These research-based evaluations measure a district's progress toward its own strategic goals for technology implementation and integration. Over several years of this work, the process most often employed by districts has consisted of three interrelated stages: evaluation framing, data collection, and reporting.

Stage 1—Evaluation framing, committee orientation, and rubric development

Just as with technology planning, technology evaluation is a committee-driven process. Therefore, the first step is for the district to appoint an evaluation committee composed of stakeholders such as teachers, administrators, parents, board members, and students. The exact composition varies and reflects the values and priorities of the district conducting the evaluation. Once the committee is selected, we facilitate a full day of training for its members. During this training, the entire evaluation process is reviewed, milestones are set, and initial responsibilities are assigned.

After its initial day of training, the committee meets for two more days to develop the district's key evaluation questions and to create indicators for those questions. While the indicators are always tied directly to the district's own strategic vision and goals for technology, we also key them to standards and frameworks such as the National Educational Technology Standards (NETS) for students and Milken's Seven Dimensions, as well as to local and state curriculum frameworks.

In most cases, the evaluation committee breaks into subcommittees to develop indicators for individual questions. Once the indicators have been developed and approved by the district committee, we organize all of this work into a set of indicator rubrics. These rubrics (see www.sun-associates.com/eval/sample.html for examples) form the basis for the district's evaluation work.

Stage 2—Data collection and analysis

Data collection is designed in response to the district's evaluation rubrics. The data gathered should enable the district to answer its evaluation questions and to gauge its performance against its evaluation rubrics. Typically, a data-collection effort will include:

- Surveys of teachers, administrators, students, and/or community members. A unique survey is created for each target population, based on the data-collection needs described in the district's rubrics.

- Focus group interviews of teachers, administrators, students, technology staff, and other groups of key participants in the district's educational and technology efforts.

- Classroom observations. External evaluators typically spend time in schools and classrooms throughout the district. The evaluators not only observe teachers and students using technology but also find that they can learn much about how technology is affecting teaching and learning simply by observing classroom setups, teaching styles, and student behaviors.

It is important that the data-collection effort not rely on a single data source (e.g., surveys). The district needs to design a data-collection strategy that has the best chance of capturing the big picture of how technology is used, and what impact it has, within the district. This requires the simultaneous use of multiple data-collection strategies.

Stage 3—Findings, recommendations, and reporting

Reporting is important to a formative evaluation because it establishes a common base for reflection. An evaluation that is not shared with the community it evaluates cannot prompt reflection, and reflection is necessary for positive and informed change. The first step in reporting is to use the data gathered in the previous stage to score the district's performance against its own rubrics.

These scores, along with a detailed explanation of how they were assigned, form the basis of the report. In addition, reports typically contain detailed findings and recommendations. The recommendations describe how the district can adapt or change current practices to achieve higher levels of performance in succeeding years, and they are always grounded in research-based knowledge of best practices in teaching, learning, and technology. Recommendations follow from the findings; in other words, they are aligned with the district's desired outcomes as documented in its indicator rubrics.

In most cases, evaluation projects end with a formal presentation to the district committee and to other audiences identified by the overseeing administrator. The district then distributes the report and begins implementing its recommendations. This is the point at which the next review cycle begins.

These steps for evaluating a technology plan are appropriate for most schools and districts. As the chart indicates, this is a cyclic process for continuous improvement and for greater impact on students and the educational program.

—by Jeff Sun, Director

Sun Associates

Originally printed in SEIR*TEC NewsWire Volume Five, Number Three, 2002